Modeling non-linear relationships

Intro to Data Analytics

Model checking

Data: Paris Paintings

Download here or from Brightspace.

- Number of observations: 3393

- Number of variables: 61

“Linear” models

- We’re fitting a “linear” model, which assumes a linear relationship between explanatory and response variables.

- How do we assess this?

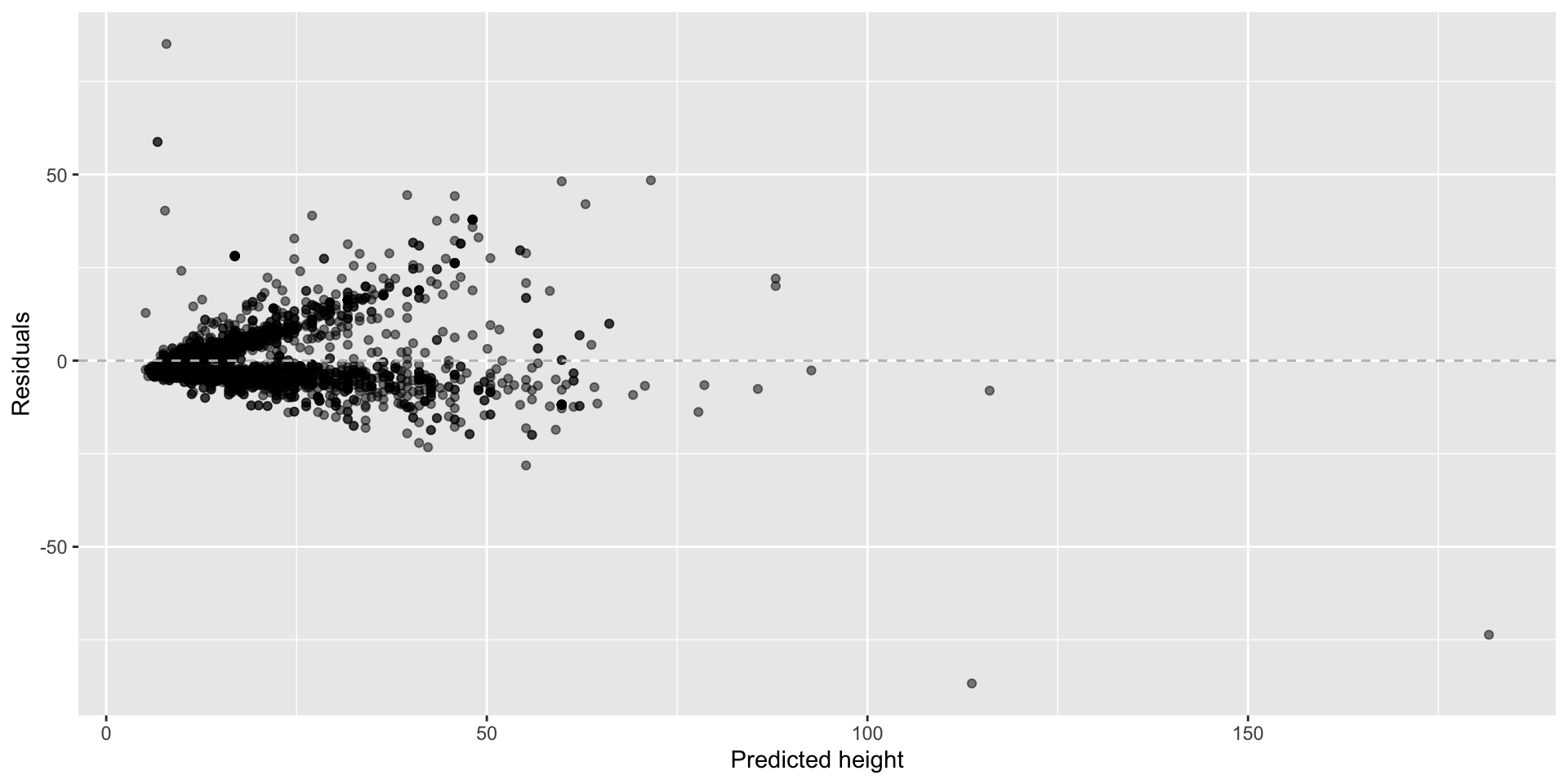

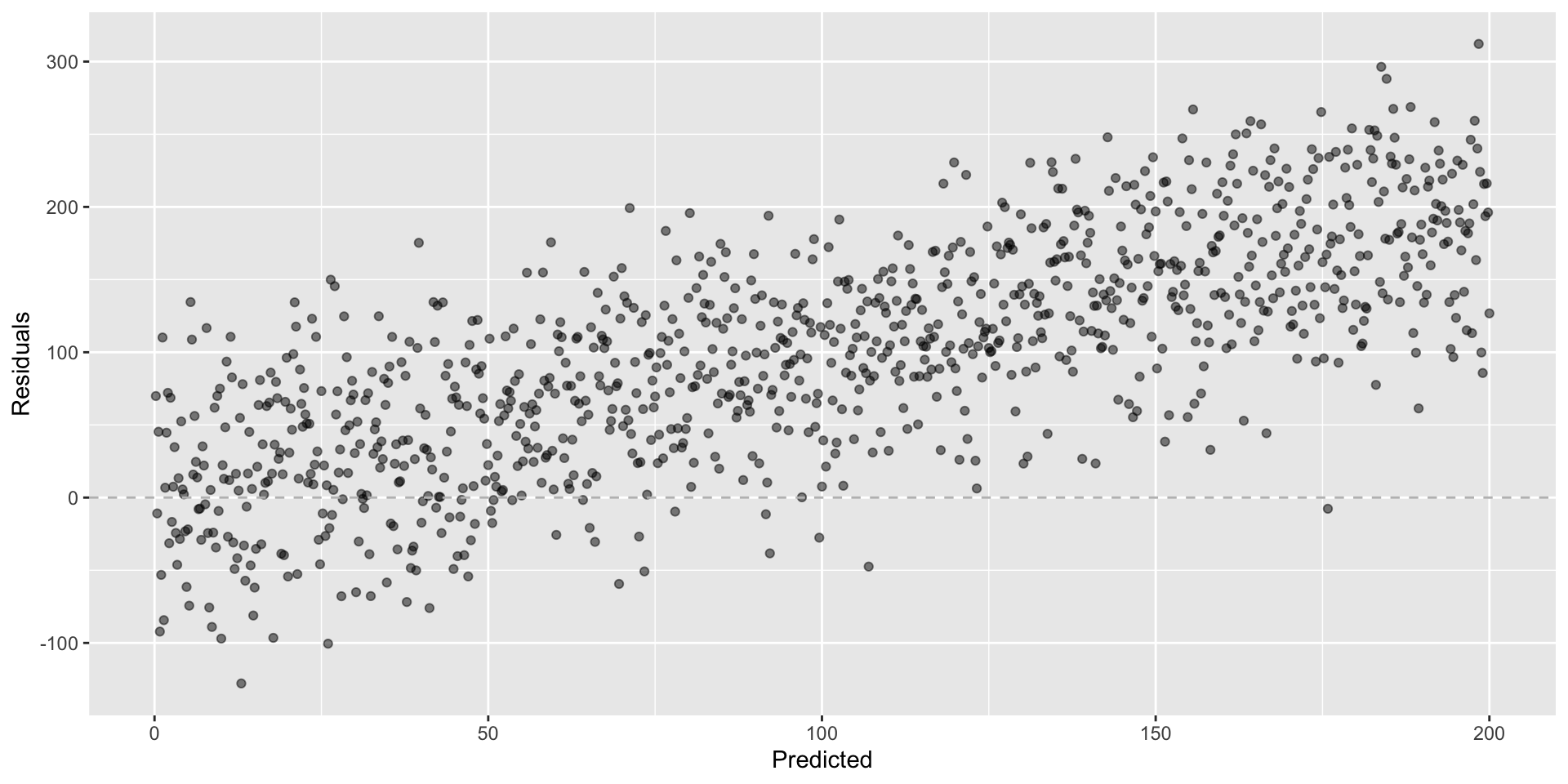

Graphical diagnostic: residuals plot

    library(tidymodels)  # attaches parsnip, broom, ggplot2, dplyr

    # pp is the Paris Paintings data frame, loaded beforehand
    ht_wt_fit <- linear_reg() %>%
      set_engine("lm") %>%
      fit(Height_in ~ Width_in, data = pp)

    # Add fitted values, residuals, and other diagnostics to the model data
    ht_wt_fit_aug <- augment(ht_wt_fit$fit)

    ggplot(ht_wt_fit_aug, mapping = aes(x = .fitted, y = .resid)) +
      geom_point(alpha = 0.5) +
      geom_hline(yintercept = 0, color = "gray", lty = "dashed") +
      labs(x = "Predicted height", y = "Residuals")

The augmented data frame, ht_wt_fit_aug:

    # A tibble: 3,135 × 9
       .rownames Height_in Width_in .fitted  .resid     .hat .sigma      .cooksd
       <chr>         <dbl>    <dbl>   <dbl>   <dbl>    <dbl>  <dbl>        <dbl>
     1 1                37     29.5   26.7  10.3    0.000399   8.30 0.000310
     2 2                18     14     14.6   3.45   0.000396   8.31 0.0000342
     3 3                13     16     16.1  -3.11   0.000361   8.31 0.0000254
     4 4                14     18     17.7  -3.68   0.000337   8.31 0.0000330
     5 5                14     18     17.7  -3.68   0.000337   8.31 0.0000330
     6 6                 7     10     11.4  -4.43   0.000498   8.31 0.0000709
     7 7                 6     13     13.8  -7.77   0.000418   8.30 0.000183
     8 8                 6     13     13.8  -7.77   0.000418   8.30 0.000183
     9 9                15     15     15.3  -0.333  0.000377   8.31 0.000000304
    10 10                9      7      9.09 -0.0870 0.000601   8.31 0.0000000330
    # ℹ 3,125 more rows
    # ℹ 1 more variable: .std.resid <dbl>

Residual plot: helpful graphical diagnostic

- augment() adds model-based columns for each observation:
  - fitted values: the model’s estimate of (predicted value of) the dependent variable.
  - residuals: the difference between actual and predicted values.

- Rows with NAs are dropped when the model is fit, so the augmented data frame may have fewer rows than the original data frame (here 3,135 of 3,393).
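The two points above can be checked on a small example. This is a minimal sketch in base R, assuming a made-up data frame `toy` (hypothetical values, not the Paris Paintings data); it calls `lm()` directly, which is the engine the slides set with `set_engine("lm")`.

```r
# Toy data (hypothetical values); one width is missing.
toy <- data.frame(
  Height_in = c(37, 18, 13, 14, 7, 6),
  Width_in  = c(29.5, 14, 16, 18, NA, 13)
)

# By default lm() drops rows with NAs in the model variables.
toy_fit <- lm(Height_in ~ Width_in, data = toy)

n_original <- nrow(toy)      # rows in the data
n_used     <- nobs(toy_fit)  # rows actually used in the fit

# Residual = actual - predicted, computed by hand on the rows that were used.
used         <- toy[!is.na(toy$Width_in), ]
manual_resid <- used$Height_in - fitted(toy_fit)

# TRUE if the hand-computed residuals match the ones stored in the model.
resid_match <- isTRUE(all.equal(unname(manual_resid), unname(residuals(toy_fit))))
```

Comparing `n_original` with `n_used` shows how many rows were lost to missing values, which is the same reason the augmented tibble has fewer rows than the full data set.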

Looking for…

- Residuals distributed randomly around 0

- With no visible pattern along the x or y axes

Not looking for…

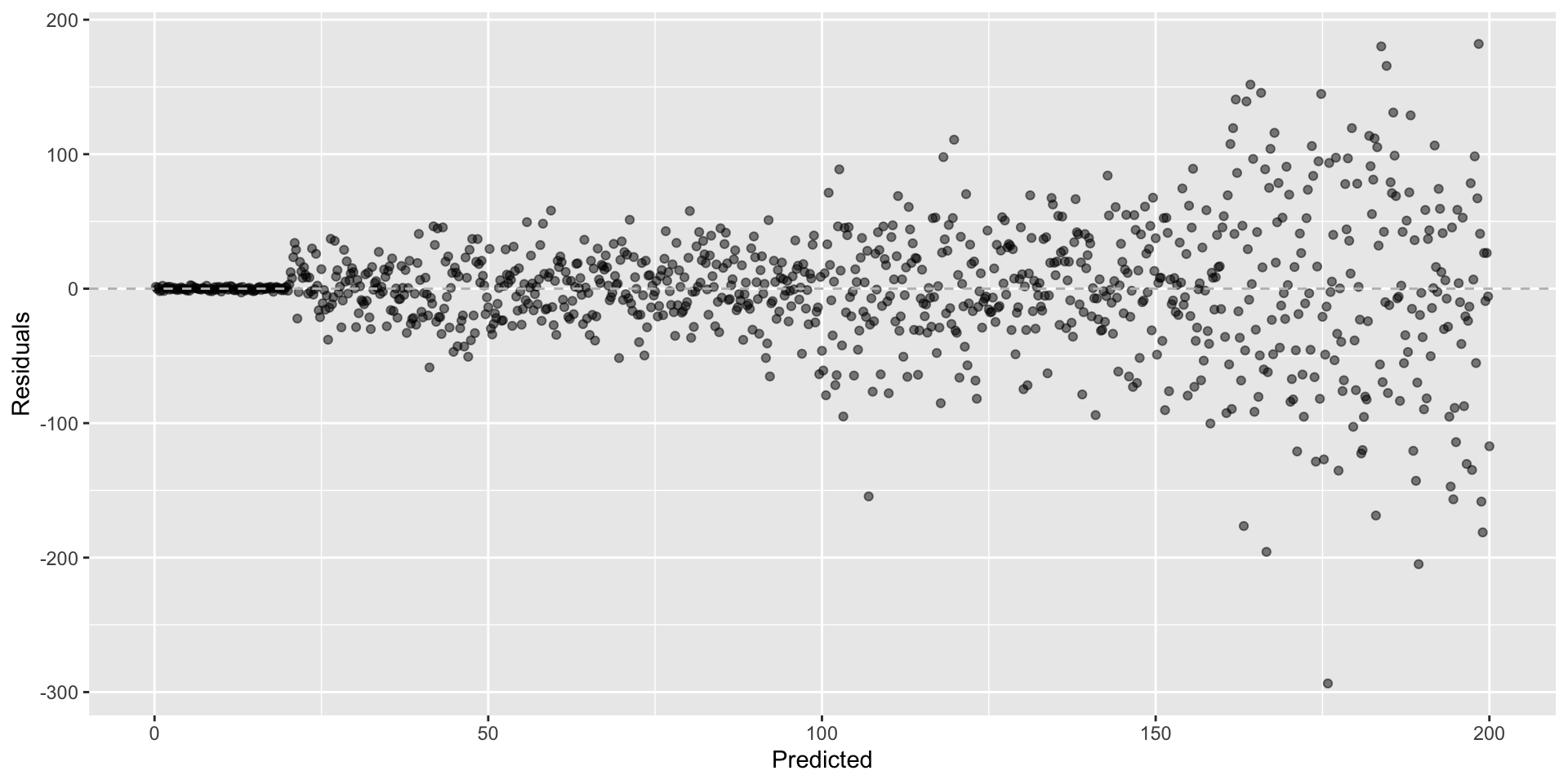

Fan shapes

Not looking for…

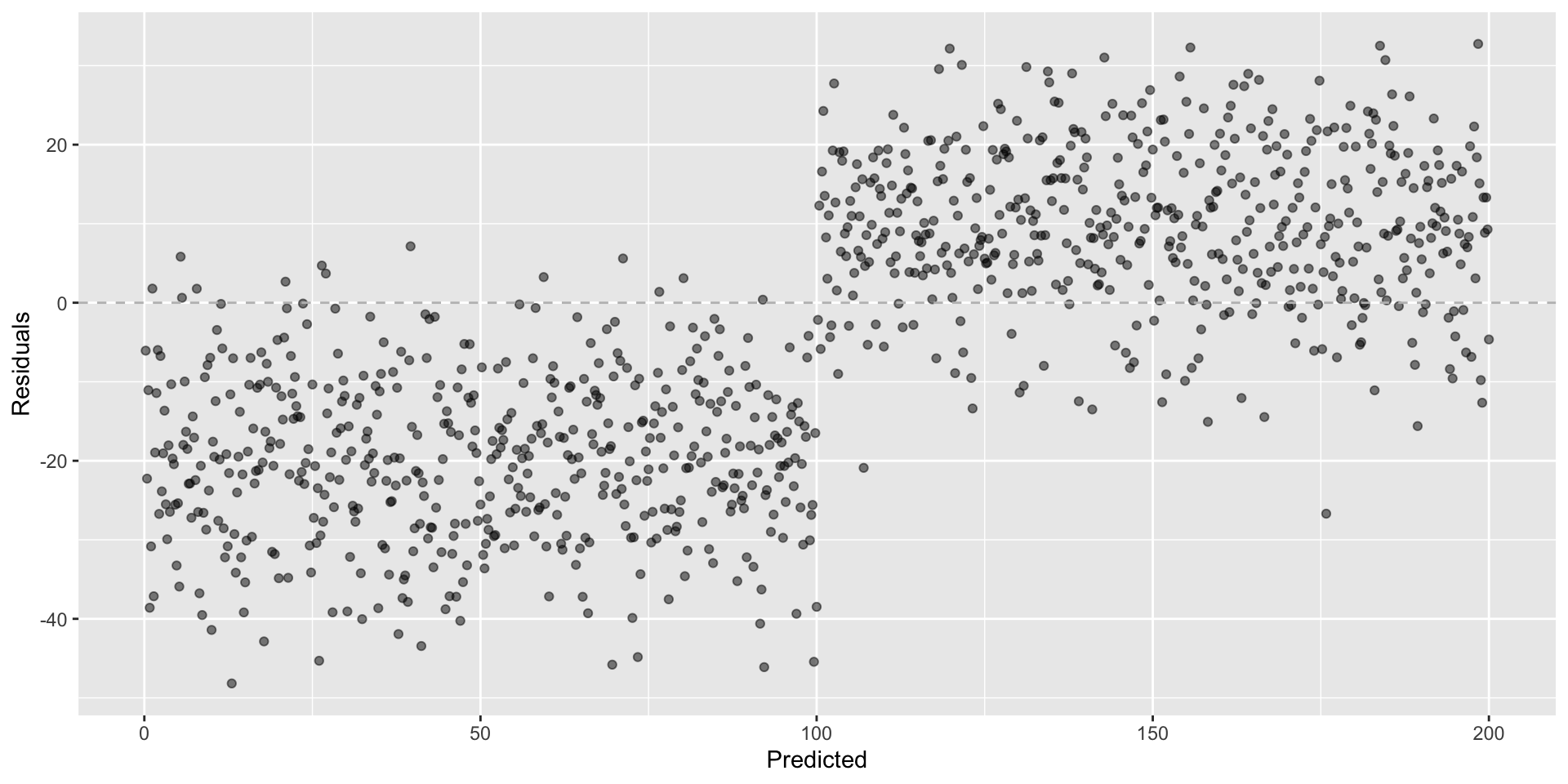

Groups of patterns

Not looking for…

Residuals correlated with predicted values

Not looking for…

Any patterns!

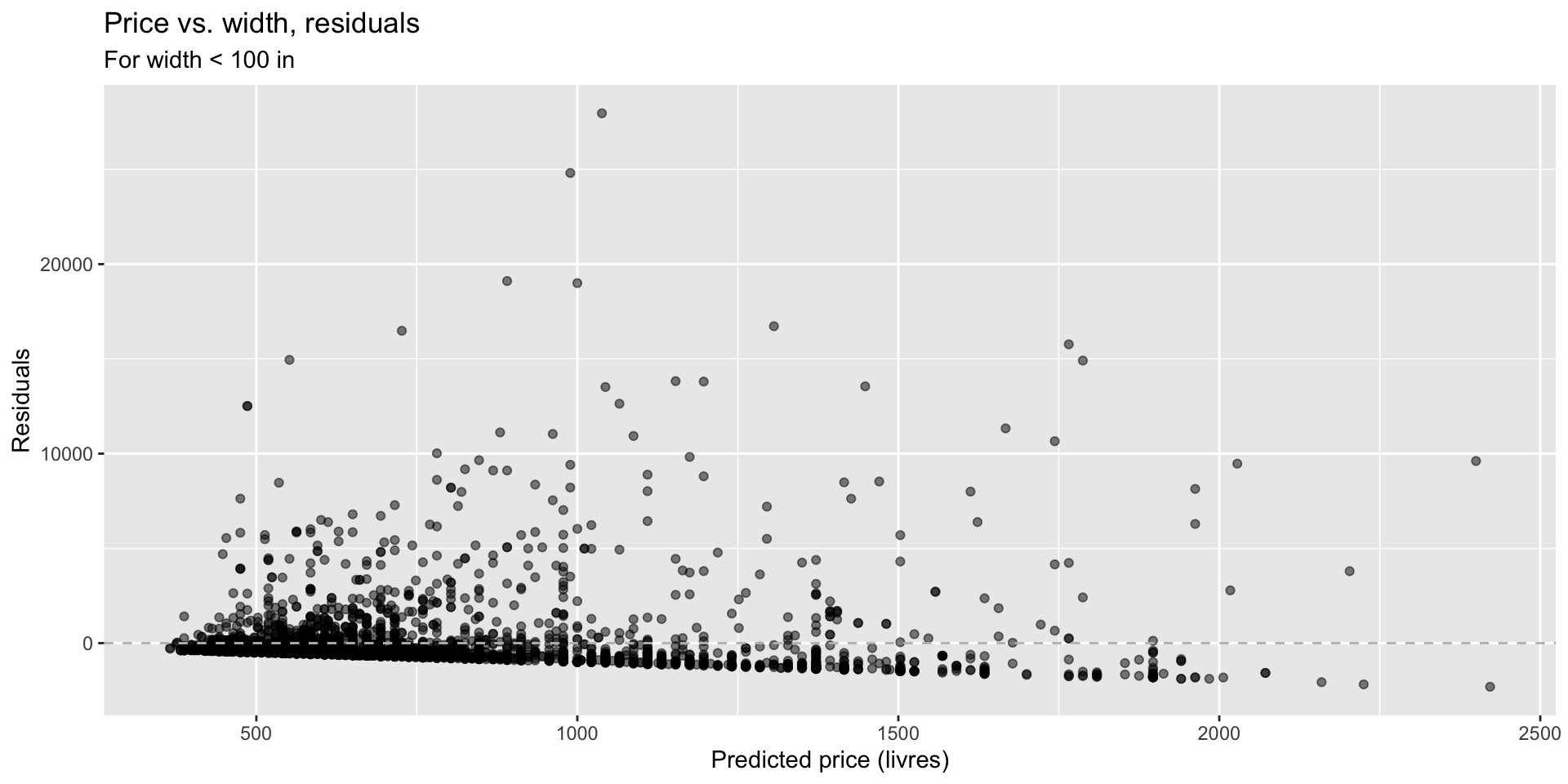

What patterns does the residuals plot reveal that should make us question whether a linear model is a good fit for modelling the relationship between height and width of paintings?

- Variability of residuals increases as predicted values increase.
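A fan shape can also be checked numerically. The sketch below uses simulated data (the variable names and the noise model are assumptions made for illustration) and compares the spread of residuals for low versus high predicted values.

```r
set.seed(1)
n <- 500
x <- runif(n, 1, 100)

# Noise standard deviation grows with x, which produces a fan shape.
y <- 5 + 0.8 * x + rnorm(n, sd = 0.1 * x)

fit <- lm(y ~ x)

# Split observations at the median predicted value.
low <- fitted(fit) <= median(fitted(fit))

sd_low  <- sd(residuals(fit)[low])   # residual spread for small predictions
sd_high <- sd(residuals(fit)[!low])  # residual spread for large predictions
```

If `sd_high` is clearly larger than `sd_low`, the variability of the residuals increases with the predicted values, as in the height vs. width plot.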

Exploring linearity

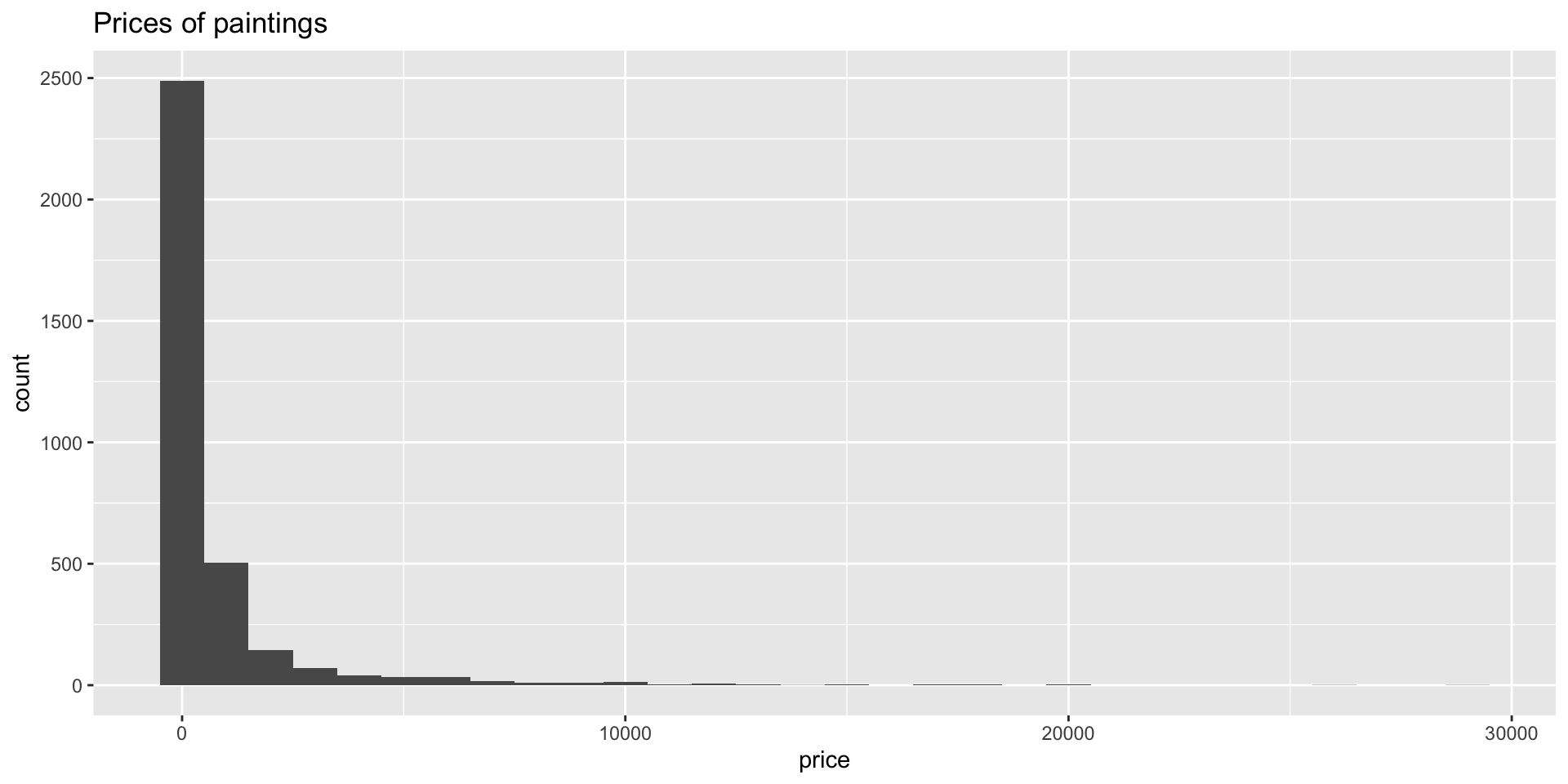

Data: Paris Paintings

- Skew?

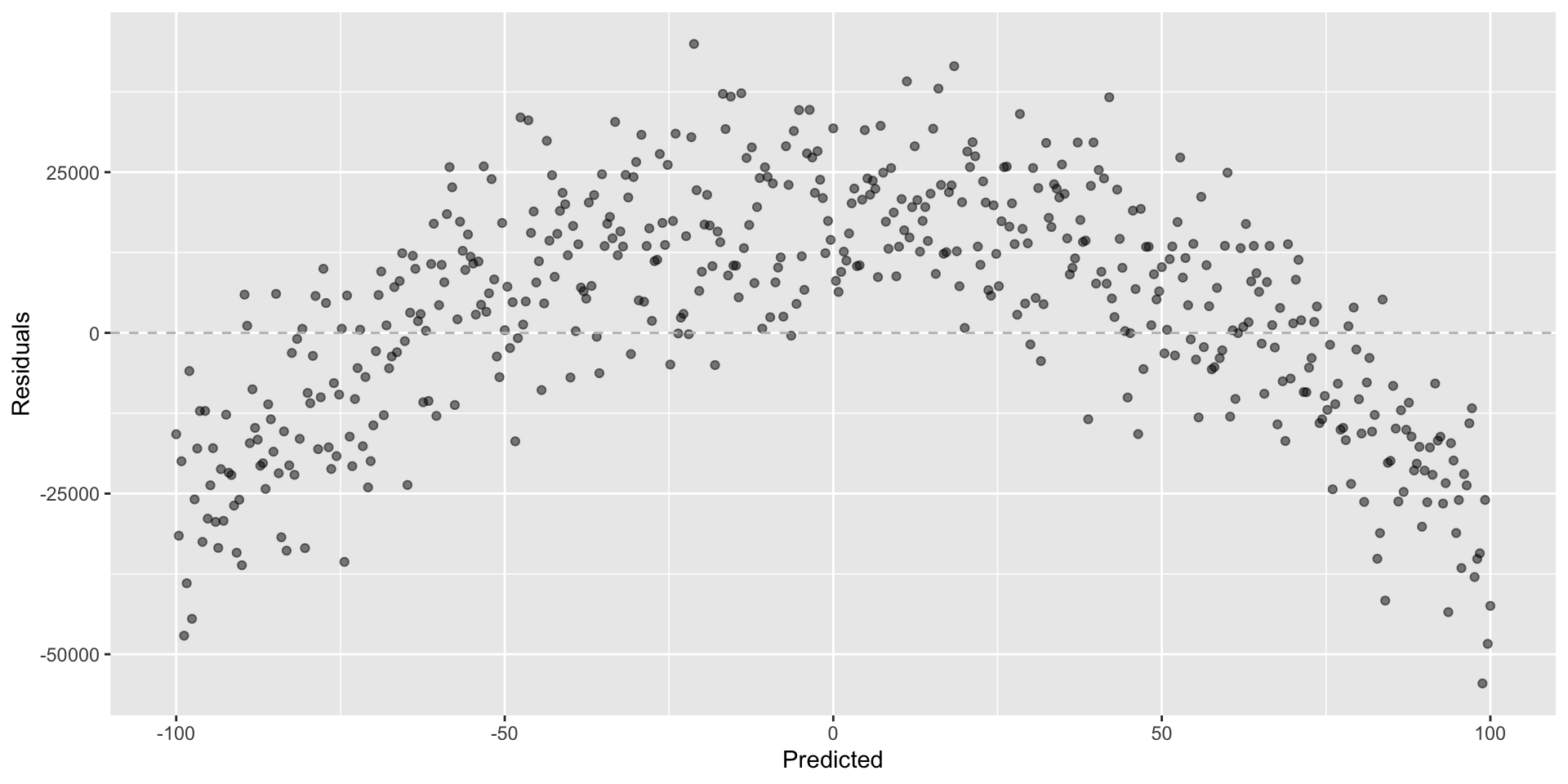

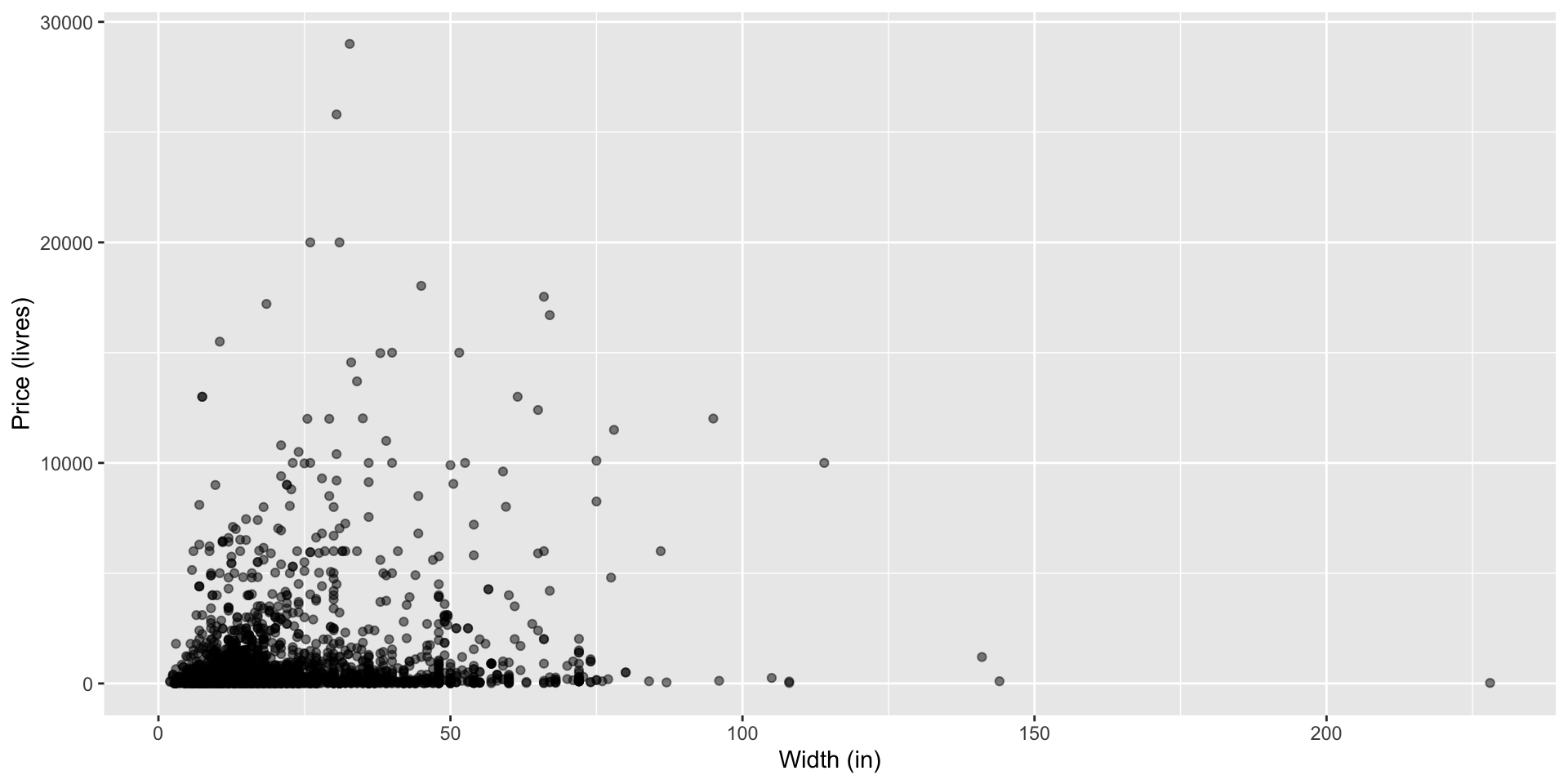

Price vs. width

- V-shape → not linear

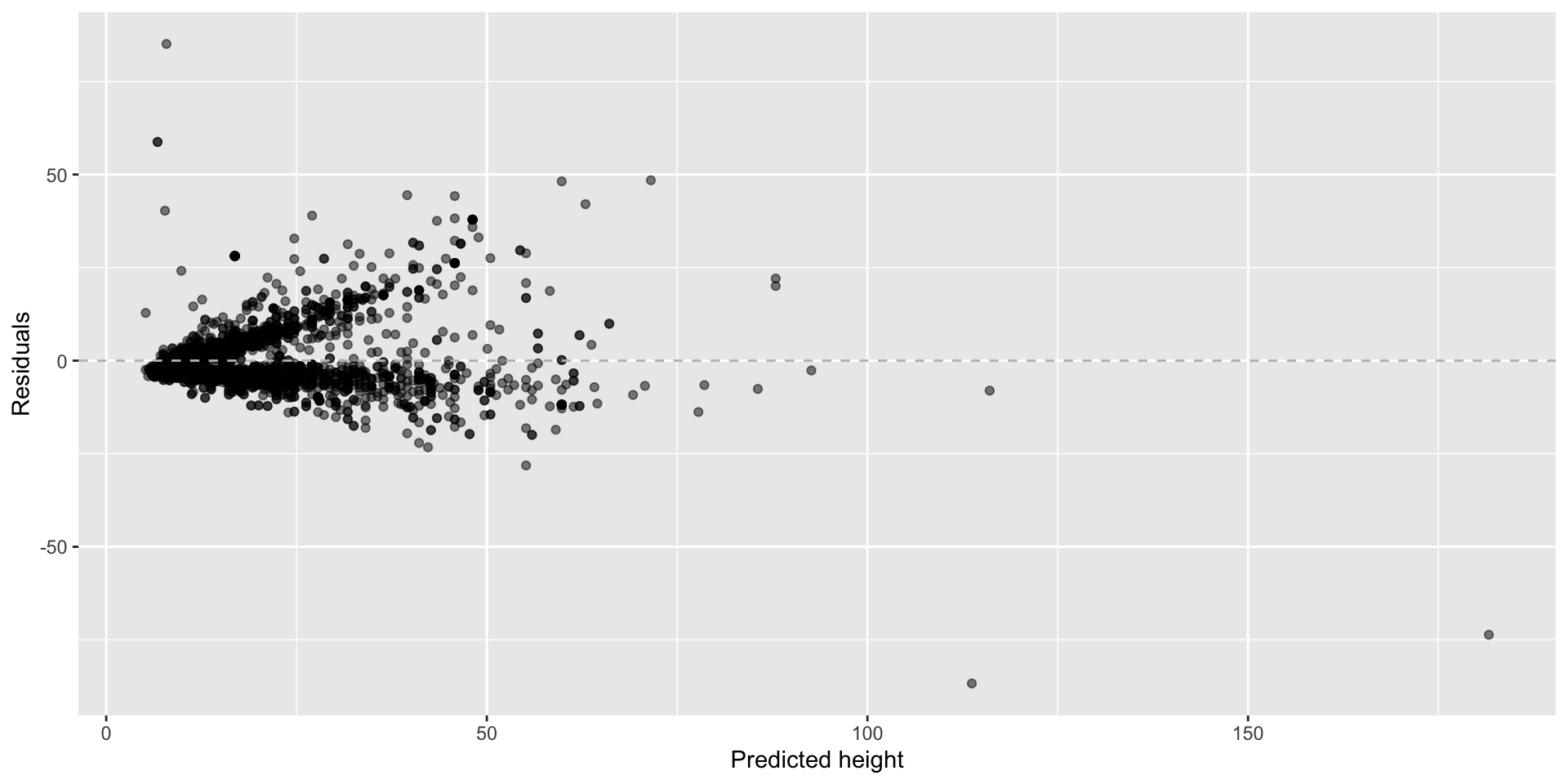

Focus on paintings with Width_in < 100 inches

That is, paintings with width < 2.54 meters
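As a rough sketch of this filtering step, here it is in base R on a made-up data frame `pp_toy` (hypothetical values standing in for the real data), along with the unit conversion behind the 2.54 meter figure.

```r
# Toy data frame standing in for the Paris Paintings data (hypothetical values).
pp_toy <- data.frame(
  price    = c(360, 6, 12, 1200, 90),
  Width_in = c(29.5, 14, 120, 99.5, 100)
)

# Keep only paintings strictly narrower than 100 inches.
pp_toy_narrow <- subset(pp_toy, Width_in < 100)

# 1 inch = 2.54 cm, so 100 inches = 254 cm = 2.54 m.
cutoff_m <- 100 * 2.54 / 100
```

Note that a painting exactly 100 inches wide is excluded, because the condition is a strict inequality.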

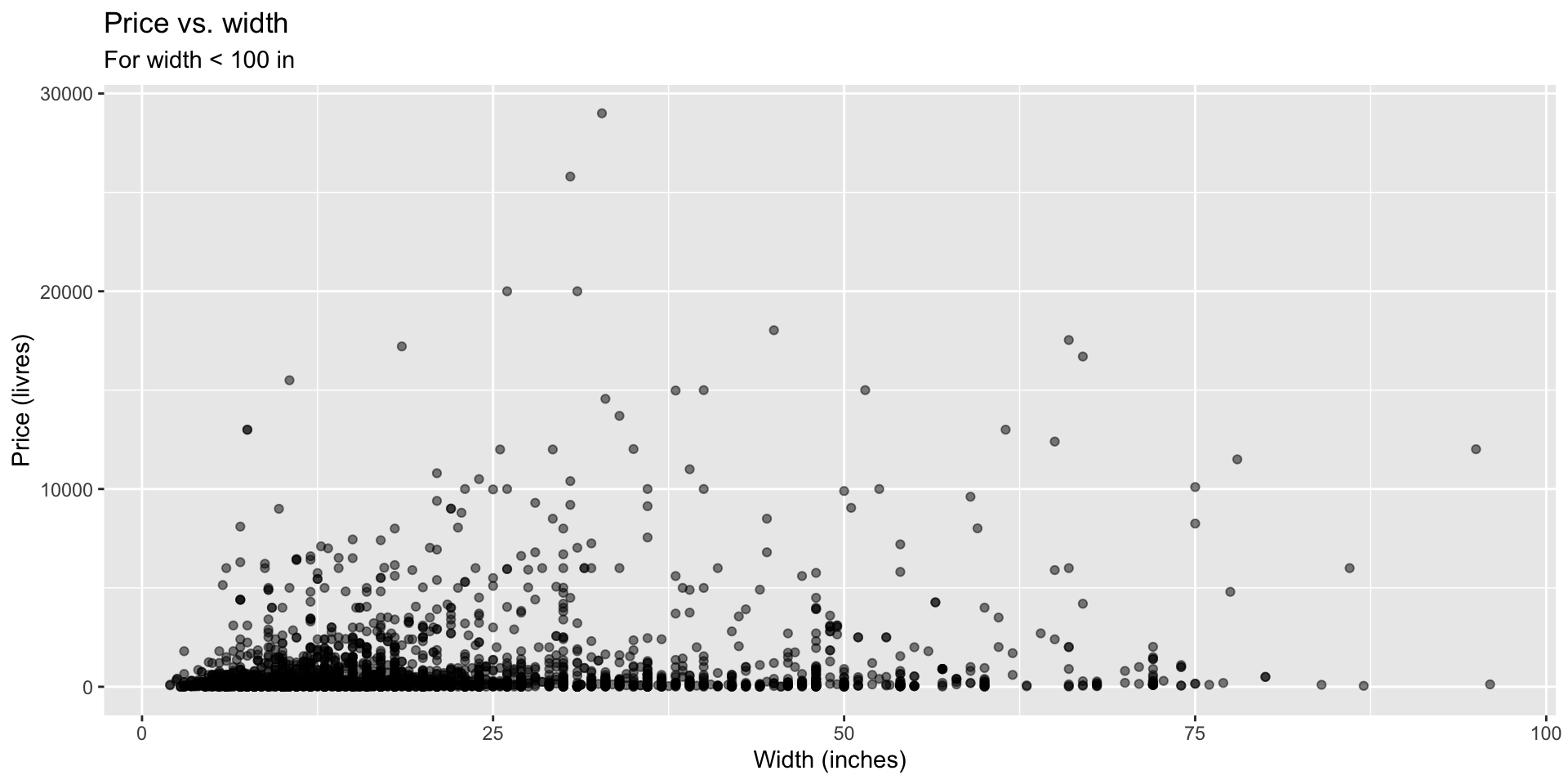

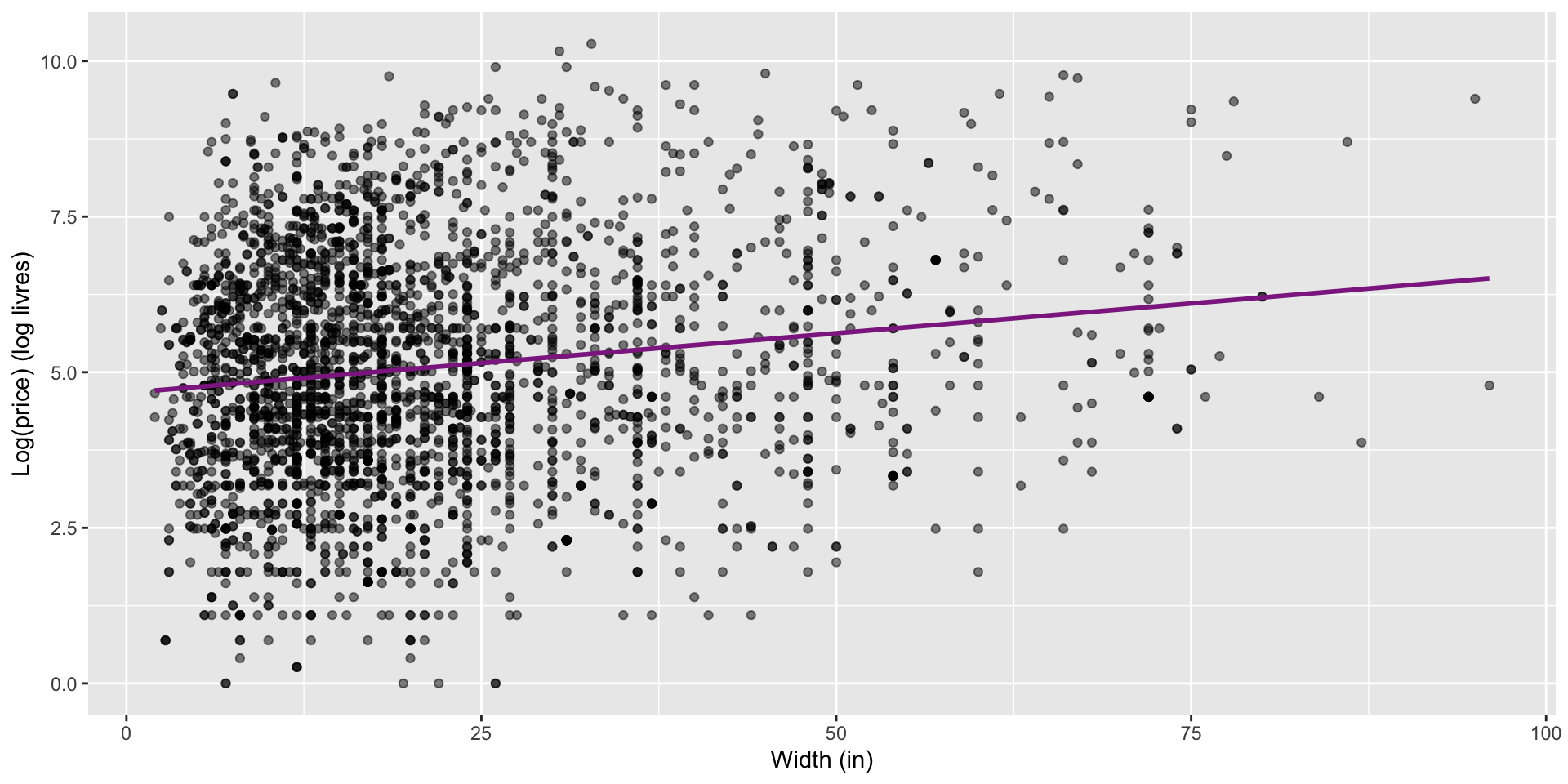

Price vs. width

Which plot shows a more linear relationship? Both show scatter, but is either clearly linear? Do we see a band of data?

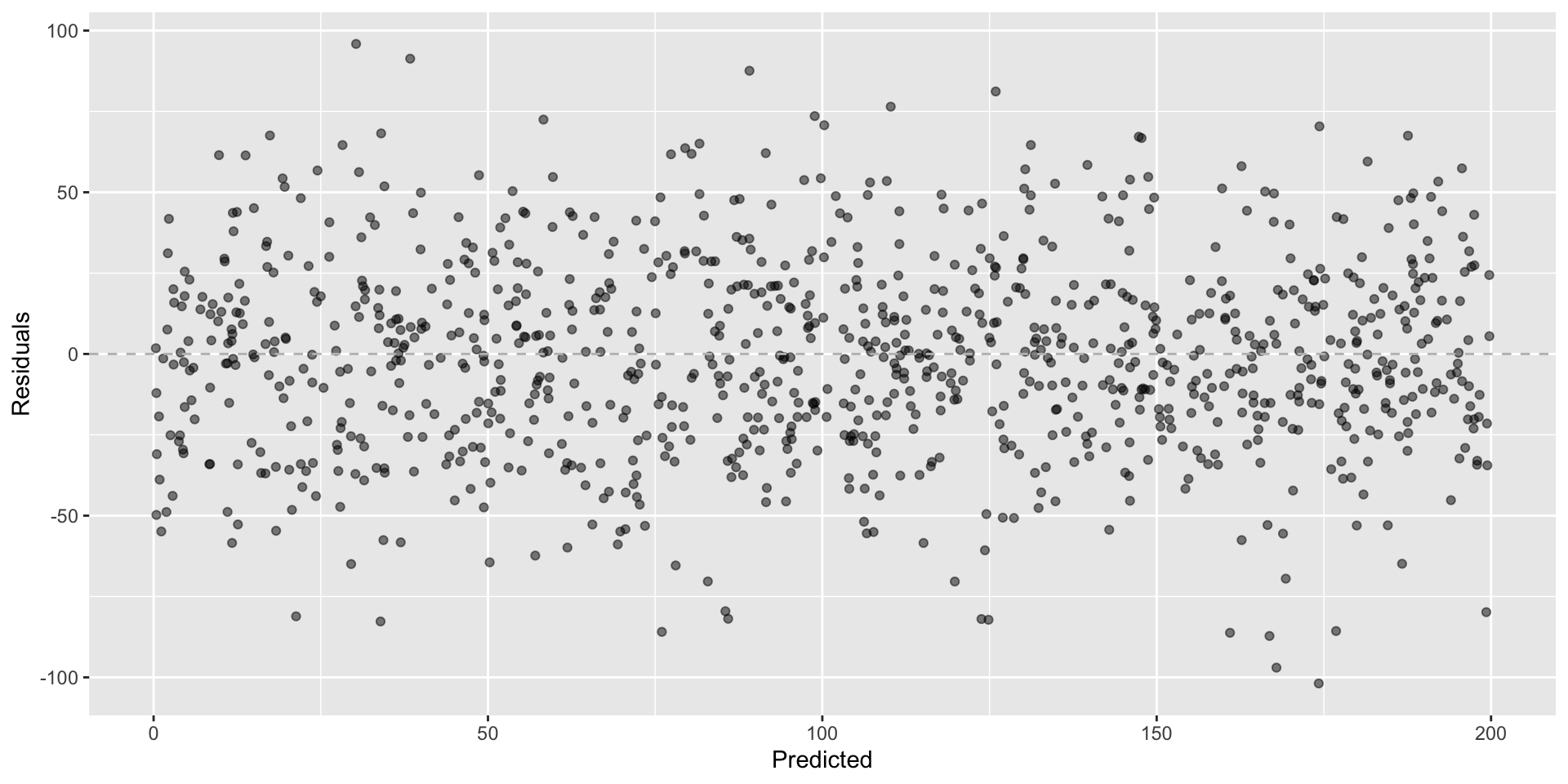

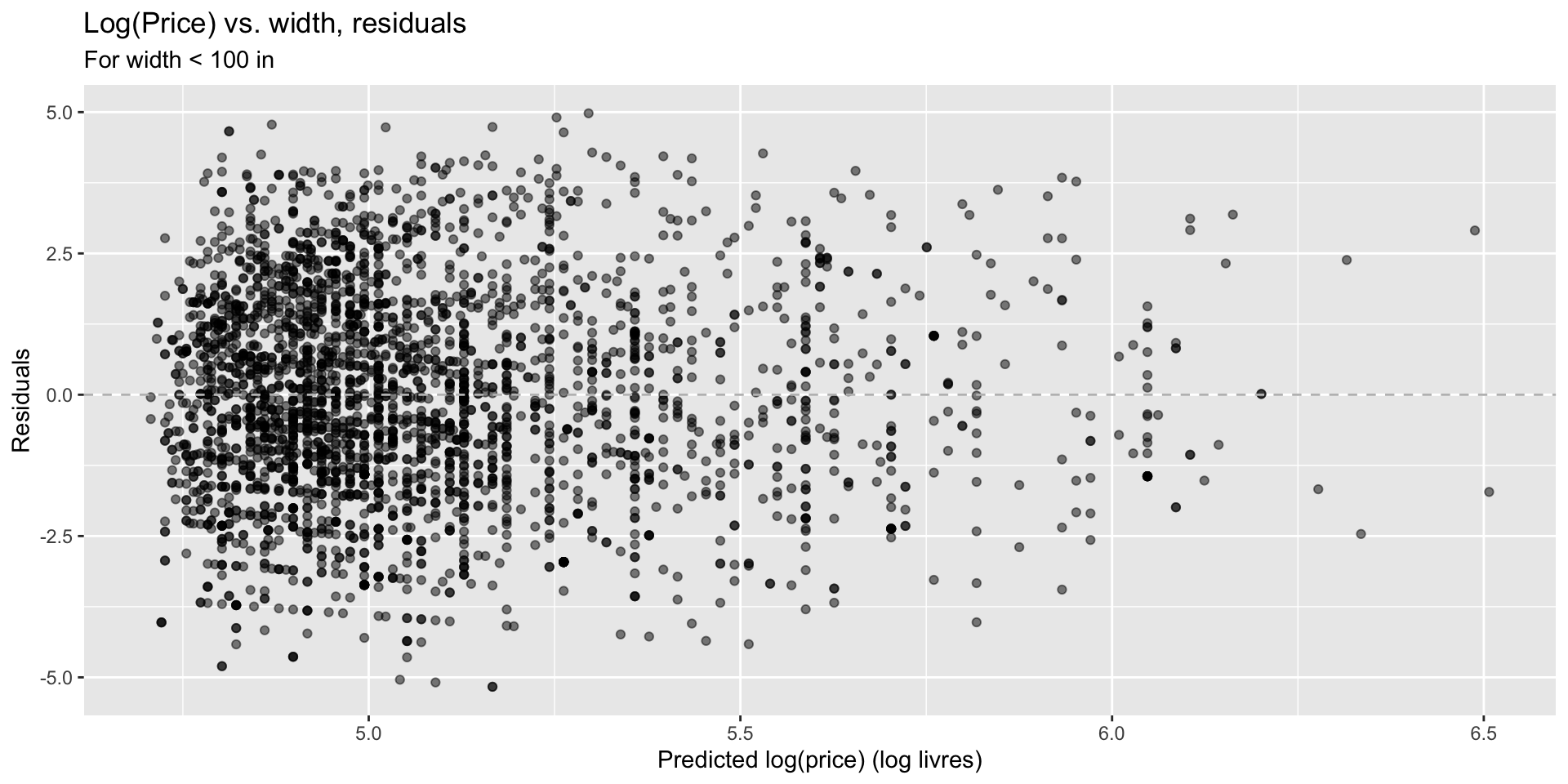

Price vs. width, residuals

Which plot shows residuals that are uncorrelated with predicted values from the model? Also, what is the unit of the residuals? Were we able to capture the linear relationship?

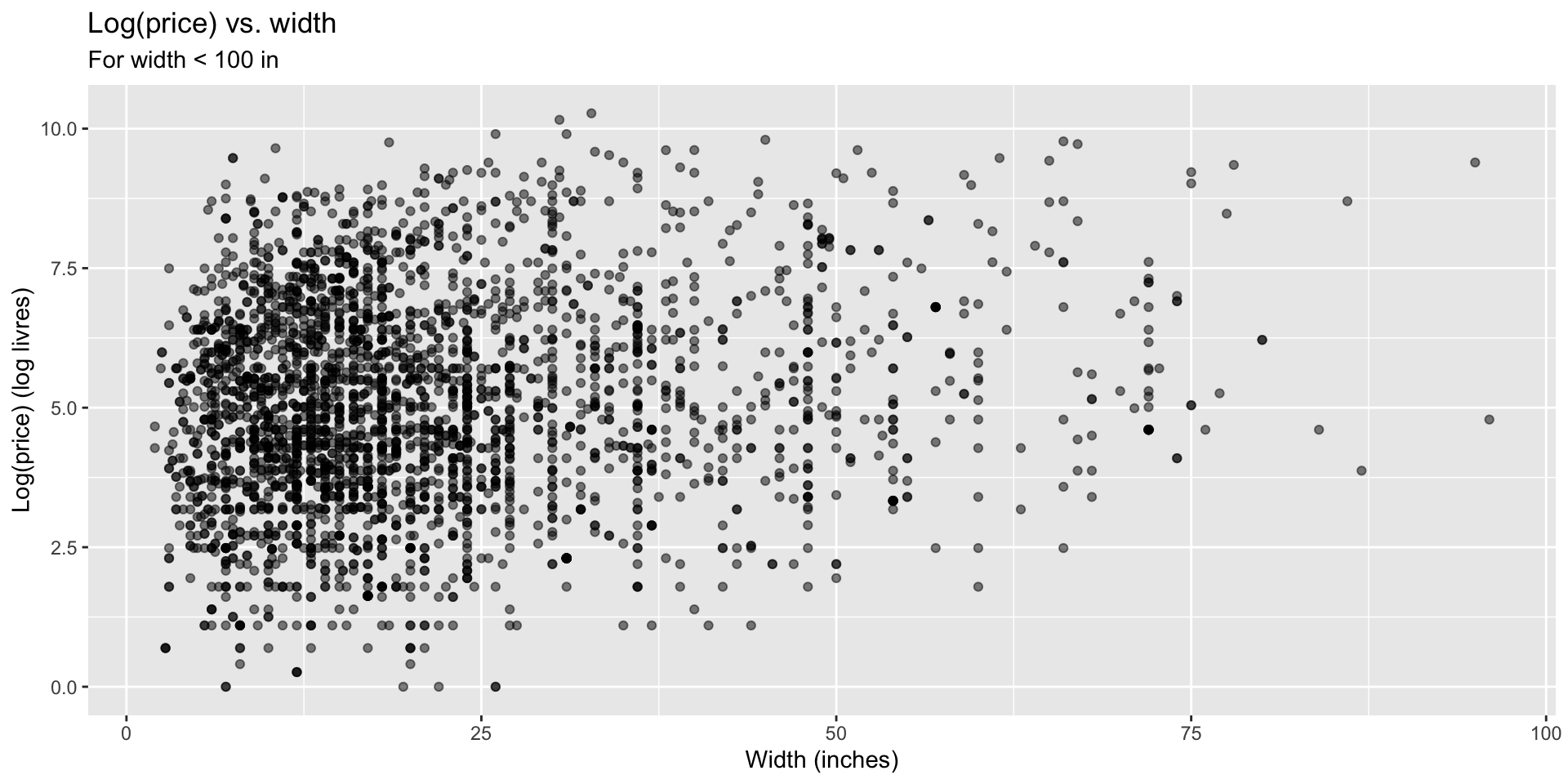

Transforming the data

- We saw that `price` has a right-skewed distribution, and the relationship between price and width of painting is non-linear.

- In these situations a transformation applied to the response variable may be useful.

- In order to decide which transformation to use, examine the distribution of the response variable.

- Extremely right skewed distribution suggests log transformation may be useful.

- log = natural log, \(ln\)

- Default base of the `log` function in R is the natural log: `log(x, base = exp(1))`
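A quick check of the default base, using an arbitrary example vector `x`:

```r
x <- c(1, 10, 100, 1000)

natural_default  <- log(x)                 # natural log by default
natural_explicit <- log(x, base = exp(1))  # same thing, base written out
common           <- log10(x)               # base 10, for comparison

# TRUE if the default and the explicit natural log agree.
same <- isTRUE(all.equal(natural_default, natural_explicit))
```

If a base-10 log is wanted instead, it has to be requested explicitly with `log10(x)` or `log(x, base = 10)`.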

Logged price vs. width

How do we interpret the slope of this model?

Models with log transformation

Interpreting the slope

\[ \widehat{log(price)} = 4.67 + 0.0192 * Width \]

- For each additional inch the painting is wider, the log price of the painting is expected to be higher, on average, by 0.0192 log livres.

- which is not a very useful statement…

Working with logs

- Subtraction and logs: \(log(a) - log(b) = log(a / b)\)

- Natural logarithm: \(e^{log(x)} = x\)

- We can use these identities to “undo” the log transformation
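Both identities can be verified numerically in R. The values of `a` and `b` below are arbitrary positive numbers chosen for illustration.

```r
a <- 1200
b <- 360

# Subtraction rule: log(a) - log(b) equals log(a / b).
lhs <- log(a) - log(b)
rhs <- log(a / b)

# exp() undoes the natural log.
back <- exp(log(a))
```

Here `lhs` and `rhs` agree up to floating-point error, and `back` recovers `a`.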

Interpreting the slope

The slope coefficient for the log transformed model is 0.0192, meaning the log price difference between paintings whose widths are one inch apart is predicted to be 0.0192 log livres.

Using this information, and properties of logs that we just reviewed, fill in the blanks in the following alternate interpretation of the slope:

For each additional inch of width, the price of the painting is expected to be ___ (higher/lower), on average, by a factor of ___.

Interpreting the slope

For each additional inch of width, the price of the painting is expected to be ___, on average, by a factor of ___.

\[ log(\text{price for width x+1}) - log(\text{price for width x}) = 0.0192 \]

\[ log\left(\frac{\text{price for width x+1}}{\text{price for width x}}\right) = 0.0192 \]

\[ e^{log\left(\frac{\text{price for width x+1}}{\text{price for width x}}\right)} = e^{0.0192} \]

\[ \frac{\text{price for width x+1}}{\text{price for width x}} \approx 1.02 \]

For each additional inch the painting is wider, the price of the painting is expected to be higher, on average, by a factor of 1.02. In other words, the price is expected to be about 2% higher: 2 cents more for every dollar (or livre) of price.
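The arithmetic can be reproduced in R. This sketch plugs in the coefficients from the fitted equation on the slides; the helper `pred_price()` and the widths 30 and 31 are assumptions made for illustration, and the back-transformed value is only a rough stand-in for a predicted price.

```r
b0 <- 4.67    # intercept from the fitted equation
b1 <- 0.0192  # slope from the fitted equation

factor_1in  <- exp(b1)                 # about 1.019 per additional inch
pct_1in     <- (factor_1in - 1) * 100  # about 1.9 percent higher per inch
factor_10in <- exp(10 * b1)            # about 1.21 for ten additional inches

# Hypothetical helper: back-transform the predicted log price.
pred_price <- function(width) exp(b0 + b1 * width)

# Ratio of predictions for two paintings one inch apart in width.
ratio <- pred_price(31) / pred_price(30)
```

The ratio does not depend on which pair of widths is chosen, as long as they are one inch apart. The effect also compounds: ten inches multiplies the price by `exp(10 * b1)`, not by ten times the one-inch percentage.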

Recap

- Non-constant variance is one of the most common model violations; however, it is usually fixable by transforming the response (y) variable.

- The most common transformation is the log transform, \(log(y)\), which is especially useful when the response variable is (extremely) right skewed.

- This transformation is also useful for variance stabilization.

- When using a log transformation on the response variable the interpretation of the slope changes: “For each unit increase in x, y is expected on average to be higher/lower by a factor of \(e^{b_1}\).”

- Another useful transformation is the square root: \(\sqrt{y}\), especially useful when the response variable is counts.
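To illustrate the variance-stabilization point, here is a small simulation. It assumes a made-up data-generating process in which noise is multiplicative (so `log(y)` is linear in `x` with constant spread); the helper `spread_ratio()` is hypothetical and simply compares residual spread between large and small predicted values.

```r
set.seed(42)
n <- 1000
x <- runif(n, 0, 100)

# Multiplicative noise: log(y) is linear in x with constant spread.
y <- exp(4.67 + 0.0192 * x + rnorm(n, sd = 0.5))

# Hypothetical helper: ratio of residual SDs above vs. below the median fitted value.
spread_ratio <- function(fit) {
  high <- fitted(fit) > median(fitted(fit))
  sd(residuals(fit)[high]) / sd(residuals(fit)[!high])
}

raw_fit <- lm(y ~ x)       # response on the original scale
log_fit <- lm(log(y) ~ x)  # log-transformed response

ratio_raw <- spread_ratio(raw_fit)  # expected to be well above 1 (fan shape)
ratio_log <- spread_ratio(log_fit)  # expected to be close to 1
slope_log <- coef(log_fit)[["x"]]   # estimate of the slope on the log scale
```

A ratio near 1 for the logged model suggests roughly constant variance, while the slope on the log scale is interpreted through `exp(slope_log)` as a multiplicative factor.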

Transform, or learn more?

- Data transformations may also be useful when the relationship is non-linear.

- However, in those cases a polynomial regression may be more appropriate

- This is beyond the scope of this course, but you might need this in the future if you keep doing data analytics
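For a flavor of what polynomial regression looks like, here is a minimal sketch on simulated data with a curved relationship (all names and values are made up for illustration). It compares a straight-line fit with a quadratic fit using base R's `poly()`.

```r
set.seed(7)
x <- seq(-3, 3, length.out = 200)

# Curved (U-shaped) relationship plus noise.
y <- 1 + 2 * x^2 + rnorm(200, sd = 0.5)

line_fit <- lm(y ~ x)           # straight line
quad_fit <- lm(y ~ poly(x, 2))  # second-degree polynomial

r2_line <- summary(line_fit)$r.squared  # R-squared of the linear fit
r2_quad <- summary(quad_fit)$r.squared  # R-squared of the quadratic fit
```

With a relationship like this, the straight line explains very little of the variation, while the quadratic term captures the curve.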